The next major advance in the realm of drone warfare is being rapidly incubated and could flood into war zones, and become a huge security problem in non-combat environments too, in the near future.

What I am going to describe here is not really new to our readers. I have been talking about it for over a decade and Hollywood has portrayed it, often poorly or unwittingly, but we are now approaching the threshold of it becoming a widespread reality, with all the implications that come with it.

It’s not even a major challenge to accomplish, but that’s the point. In fact, it’s already being used to a limited degree on the battlefield today.

Spurred by rapid technological evolution due to conflicts abroad, this capability could also leapfrog academic debates on weaponized artificial intelligence and the ethical need to keep humans ‘in the loop’ when making deadly decisions.

Simply put, comparatively inexpensive ‘lower-end’ drones, both longer-range and short-range types, will soon be choosing their own targets en masse. The downstream impacts of this relatively simple-sounding revolution are massive and often misunderstood. Furthermore, the technology is already here and could soon be obtained by non-state actors and nefarious individuals, not just nation-states. Any claim that state-to-state international arms treaties or traditional regulation can stop this from happening, or even significantly slow it, is debatable at best.

How We Got Here

The threat posed by lower-end drones is where I have focused much of my work over the years. For a good part of those years, many were in denial of what was to come and even laughed at the idea that relatively cheap drones, even those bought right off the shelf, would dominate the battlefields of tomorrow.

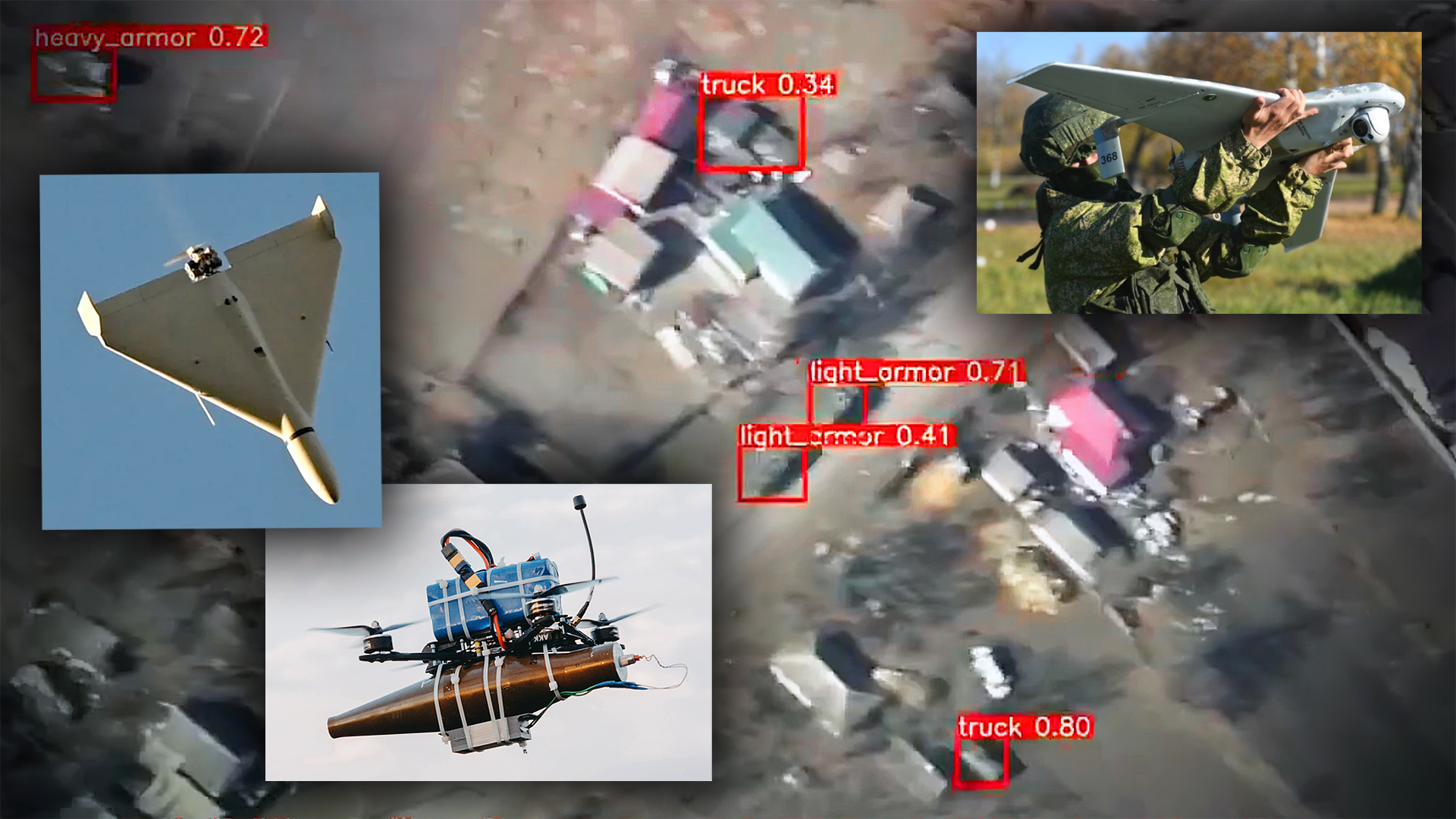

The rapid evolution of drone warfare and the weaponization of commercial and hobbyist drones has since become a stark fact, as has the proliferation of increasingly capable long-range ‘kamikaze’ or ‘one-way attack’ drones that blur the definitions of drone and missile. The impacts of both on modern warfare are now snowballing.

The lower-end drone ‘problem’ that was largely ignored, and even scoffed at, for years is now among the top issues facing friendly and enemy commanders and their troops in the field — and it’s a staggeringly vexing one.

The United States, which has placed so much emphasis on totally “owning the skies” in any airspace it wants to operate in, no longer has that luxury. It’s gone. Anyone who claims otherwise is lying to your face or is basing their opinion on what was, not what is, and especially not on what’s about to come. Don’t take my word for it, take that of America’s top commanders.

The loss of this critical edge is not due to next-generation stealth fighters that cost many billions to develop and produce or advanced air defense systems that fire missiles with multi-million dollar price tags. While U.S. supremacy in these areas has been degraded too, the loss of owning the skies is a result of everything from off-the-shelf drones that are modified into weapons to long-range one-way attack ‘kamikaze’ drones that have ‘democratized’ the access to precision aerial strike capabilities, even at standoff ranges deep into highly contested territory.

In other words, total air superiority has been lost by the most ‘unsexy’ and accessible of capabilities.

The Pentagon largely sat on this problem for years until early versions of ‘suicide’ and bomblet-dropping drones were wreaking havoc on the battlefield the better part of a decade ago by raining down on friendly forces in Mosul, Iraq. A startling lack of imagination and a myopic prioritization of traditional threat pacing, based largely around high-end, ‘exquisite’ systems, put the U.S. far behind the curve, and it has been playing catch-up ever since. Even as major strides have been made on multiple fronts to address this issue in recent years, the introduction of fully autonomous, low-end drones will nullify some of those advances.

The Rise of Automatic Target Recognition

The basic idea of Automatic Target Recognition (ATR) for weapons guidance is anything but new. The concept has been around for a long, long time in various forms and definitions. Its use by some types of cruise missiles for decades is most relevant to this discussion.

DSMAC (Digital Scene Matching Area Correlator), relatively crude by today’s standards, allowed an infrared sensor onboard the Tomahawk cruise missile to scan the terrain below and match it against pre-programmed images in its memory bank to aid in navigation and target acquisition.

Decades of use of DSMAC and other imaging recognition systems have evolved the basic digitized image matching/correlating autonomous targeting concept to be far more capable. This is due to improved imaging infrared sensors, computing hardware, and onboard software logic that can even allow the missile to pick out its target when unforeseen factors would have caused older missiles to abort their attacks or miss altogether. They can also attack far more precisely, picking out not just the general target but the pre-programmed point on that target to strike. No human is needed to control this functionality. It can be autonomous once the missile is on its way.

Some of these missiles have a man-in-the-loop (MITL) control option, but, in most cases, that requires an aircraft with a datalink to be airborne within line-of-sight of the weapon (usually the launching aircraft) as it makes its attack run. Other cruise missiles, like newer versions of the Tomahawk, can allow for user interaction in-flight by feeding imagery and other data back to controllers via satellite datalink, but these are advanced systems whose baseline capability still uses autonomous targeting via the methods described above.

Regardless, these missiles are made to attack a single target or a small set of alternate targets that are programmed into them prior to launch. These intrinsic limitations have kept them from the scrutiny associated with the morality of truly autonomous weaponry.

In most cases, a lot of ‘weaponeering’ and mission planning goes into the use of these weapons. The missile is programmed to look for a specific target in a specific area and to prosecute that target or abort doing so if its matching parameters are not met. More advanced systems, such as some focused on anti-ship applications, go a step further and can potentially ‘look’ for a number of target types loaded into their imagery/model library and choose one based on parameters set prior to launch. These systems, and others that use radar seekers capable of generating synthetic images of the target, are closer to the autonomous capabilities we are discussing in this piece, but weapons that currently feature them are large, cost hundreds of thousands or millions of dollars each, and are used for highly specific and clearly defined applications under a set of rigid circumstances.

Today, missiles like Storm Shadow/SCALP-EG, Joint Air-to-Surface Strike Missile (JASSM), advanced Tomahawk models, Joint Strike Missile, and Naval Strike Missile, as well as others, use image/scene matching/correlating ATR technology. Their infrared seekers cannot be jammed using radio frequency countermeasures and they cannot be detected via radio emissions from an onboard radar seeker as they do not have one.

For decades, DSMAC and similar image-matching targeting concepts were considered state-of-the-art technology, but today, even in their advanced form, where they can overcome targeting challenges not possible in prior iterations, they are something of a relic — or at least a clunky precursor of what’s to come.

In the present, digital image recognition is part of our everyday lives with advanced algorithms put in place to do all kinds of things from unlocking your iPhone to finding photos online. Modern surveillance, in general, makes massive use of automated target recognition of many types — from visual to radar to signals. Machine learning can ‘teach’ software how to not just detect certain objects in images and videos, but how to classify those objects into different subcategories and apply complex follow-on logic based on what is detected and identified with varying certainty. These concepts are making sifting through increasingly massive mountains of data possible.

Enabling hardware for these capabilities is only becoming cheaper, more prevalent, and compact, and the software is becoming more accessible, too.

So it really comes as no surprise that these capabilities are being directly ported over to lower-end drones, with the ability to autonomously detect, classify, and potentially target a vast array of objects on the ground. And they are quite good at it. In fact, many of the same types of drone capabilities are used for commercial/industrial purposes, like inspecting large swathes of utility infrastructure or railroad lines.

These developments are very much top-of-mind for militaries around the world as the technology is proliferating. It is already being raced into the fight in Ukraine, where Moscow and Kyiv are scrambling to leverage AI-infused lower-end drones, including to kill, in order to help turn the tide of the war.

Cutting The Tether Changes The Game

To most, the fact that a person can fly a drone dynamically over the battlefield, even chasing down cars and people, weaving through windows, and threading bomblets through hatches on tank turrets, is an incredible leap in warfighting.

It is.

As noted earlier, the drone revolution has ‘democratized’ precision-guided and standoff strikes. What was once a huge financial and technological barrier to obtain such a capability is now nearly non-existent. These same classes of drones have also changed how lower-end ‘near’ battlefield surveillance is executed in so many ways.

Taking the human out of the loop opens up a massive new frontier, and a troubling one at that. This is what’s so important to understand. The man-in-the-loop (MITL) control concept is hugely restrictive on what lower-end drones — including longer-range kamikaze types — could potentially do. This is especially true if you are trying to hit a dynamic target or target of opportunity at significant distances. These barriers rapidly degrade when the drone has the ability to pick its own targets using commercially available sensors and onboard artificial intelligence-enabled hardware and software.

The vast majority of first-person view (FPV) kamikaze drones, loitering munitions, and bomblet-dropping drones that go after moving targets or targets of opportunity are equipped with man-in-the-loop control. Someone is manually flying (or more like directing) these weapons in real-time and selecting their targets. Those that are not controlled in this manner are assigned to hit fixed targets, basically a set of coordinates on the map. The biggest drawback of the former arrangement is the need for a continuous line-of-sight datalink between the drone and its controller. This results in the aforementioned limitations in these drones’ abilities and their resulting potential applications on the battlefield.

If that communications link is lost, it can possibly be reestablished with the drone after it enters into a pre-planned holding pattern. It could also be programmed to return to a predesignated point, but either way, the mission cannot be completed. Even under a semi-autonomous concept of operations, where controllers keep an eye on the drone’s progress and approve targets without directly flying it moment-to-moment, the drone would still need a way to communicate with controllers so that they can see what it sees. Satellite communications add cost and complexity, especially in terms of the size of the drone needed to accommodate them, and in most cases, it just isn’t possible to add that capability. Advanced networking over large portions of the battlespace is another capability that can potentially overcome these issues, but it is far out of reach for the vast majority of nation-states’ armed forces, and drastically increases the complexity of operations and the hardware being employed.

Line-of-sight control over distance can also be problematic in anything but the flattest of terrain. It means that the controlling party needs to be in close proximity to the drone, within a dozen miles or so in many cases, and usually far less. In higher terrain or urban areas, operations can be severely limited. Properly equipped systems can overcome this by leveraging public cellular data networks, but only in some environments, which usually are not full-on war zones. While conspicuous towers, aerostats, or other drones can work as elevated relays to help with maintaining line-of-sight connectivity over longer distances and in more complex terrain, they also make operations more complex, and in some cases far more vulnerable. They also introduce another critical point of failure.

So, just to clarify, line-of-sight, or LOS, control is for close range. This is what most FPV, near reconnaissance, and bomblet-dropping drones you see in Ukraine utilize. Then you have relay line-of-sight, or RLOS, which uses one or more drones, aircraft, or aerostats as elevated relay platforms to extend line-of-sight connectivity. This concept is also in use in Ukraine to a degree. Then you have beyond line-of-sight, or BLOS, which is for longer-range operations or where no relay exists at all. This usually requires a satellite datalink or the ability to leverage persistent high-bandwidth ground-based infrastructure, namely cell phone networks.

The simple two-way datalinks between common radio-controlled drones and their operators can also be jammed. Just using drones that require near-continuous connectivity can alert the enemy to the drone’s presence via these emissions. The source of its control can even be rapidly geolocated and targeted, which is becoming a more pressing issue. Using these systems under strict emissions control protocols is, in many cases, impossible.

While the simple line-of-sight control arrangement may have major conveniences, especially for very short-range operations, as well as major economic efficiencies, it also has huge vulnerabilities, glaring limitations, and significant drawbacks. On the other hand, drones that can pick and attack their own targets give off no communications emissions, which makes them less detectable, and thus more resilient and survivable.

With all this in mind, you can see how allowing drones to fly to specific areas, even over great distances, to hunt for their own targets and autonomously devise attack solutions, opens up the aperture dramatically for what they can accomplish. They need no connectivity with operators at all and cutting this invisible tether unlocks their potential, for better or worse.

Same Form, New Function

Long-range kamikaze, or ‘one-way’ attack, drones, like the Iranian-designed Shahed-136 that has been the scourge of Ukraine’s air defense apparatus, are already operating without a man-in-the-loop control to hit fixed targets. They are truly ‘fire and forget’ weapons. If these types of drones were equipped with the ability to look for their own targets, they could use their high endurance to hunt for targets of opportunity, not a dozen or so miles from their launch locations like current smaller MITL kamikaze drones, but hundreds of miles away. And they can do so with considerable time on station to execute a thorough search of a defined area. They could even return home to be reused if no target is found, if they have the range to do so, or divert to a secondary fixed target pre-programmed for attack prior to launch. Otherwise, they could self-destruct.

This ability would open up dynamic targeting deep in contested territory. For Russia, for instance, this would be a huge advantage as it has failed to gain air superiority over Ukraine and has very limited ability to hit non-static targets far beyond the front lines. The same can be said for Ukraine. It could hunt for and strike Russian land vehicles deep in occupied territory, something it cannot do today because it too lacks air superiority and is confronted with the dense air defense overlay that sits atop the entire region. Flying these drones deep into those air defenses makes sense even if they do not complete their mission. A long-range kamikaze drone may cost thousands of dollars, but the high-performance surface-to-air missile that knocks it down will likely cost much more and would take far longer to replace.

Waves of similar drones could be sent to their own individual geographical ‘kill boxes,’ or defined areas of engagement. Collectively, they could put enemy targets at risk over a huge area persistently without ‘doubling up’ and attacking the same target twice. Using machine learning/AI and associated hardware they could not just identify targets of interest, but also differentiate moving from still targets, to ensure they are indeed active (not destroyed or already damaged) vehicles. Meanwhile, they can be set to engage other target types, such as surface-to-air missile systems or other high-priority targets, regardless of whether they are static or not. Even troop movements on the ground could potentially be recognized and attacked. All the parameters as to what the drone can engage, and where it can do so, can be defined and tailored to each mission before launch.

Such a capability could significantly suppress movements of forces far behind the front lines, as precision attacks on moving forces can happen literally anywhere at any time, and in significant volumes.

There is also already a precedent for this in the form of one-way attack drones that are equipped with radio frequency seekers that operate in an anti-radiation role. They seek out the signals coming from air defense systems and home in on them, flying right to the emitter and detonating. This capability, which originates from Israel, has existed for many years and is now proliferating around the world. While it is far less flexible than using visual targeting to hunt for a much wider array of targets, it still is based on the same principles and leverages the same advantages. No man-in-the-loop is needed. There are even some drones that have optical and anti-radiation targeting capabilities, combining the best of both functions, but these are a rarer variety that, once again, Israel has pioneered.

It is also critical to highlight that we are not talking about swarming capabilities here. While advanced, networked swarms get the most attention these days, they would be another big leap over the capability being discussed. Just giving lower-end drones the ability to autonomously engage targets is a far more glaring threat, which could potentially proliferate rapidly in the near term. Take the same self-targeting capabilities discussed above and network the drones that possess them across a vast area, and you have a fully autonomous, mesh-networked, cooperative swarm that can bring many more devastating capabilities and combat efficiencies to the table. But this also introduces great complexity, cost, and even some forms of vulnerability, although with massive advantages.

In other words, the barrier to entry for this type of capability is much higher than just giving the drones the ability to look for prescribed targets and strike them within a set geographical area individually.

Taught To Kill

The software loaded into drones with autonomous targeting capabilities could be trained to become extremely proficient at spotting, positively identifying, and attacking prescribed targets. Training scenarios can be repeated many thousands or even millions of times over in virtual simulations, under every circumstance imaginable, to improve the software’s ability to discern between an intended victim and objects it should not engage. Parameters can then be set based on that data as to what level of certainty (or correlation) the drone would need to prosecute a target its software-hardware combination has been trained so thoroughly to attack.

The probability of match settings is an intriguing thing to ponder in its own right. As a theoretical example, different users with different moral and tactical imperatives using the same drone could differ wildly in their selected settings. One user that is fighting a very high-stakes conflict could set that value relatively low in order to maximize the potential number of attacks. Another that is engaged in a conflict with lower direct stakes and/or that has major pressures in terms of preserving innocent life and property could set that value very high. Even at its most stringent, but still operationally relevant settings, there will be the potential for horrific errors to occur, but the same can be said about human operators too, who may even be less reliable in this regard.

All this is also enabled by increasingly powerful, lightweight, and affordable optics that smaller drones can carry. The commercial drone sector has seen incredibly rapid evolution in this regard that spills directly into military applications. The use of millimeter-wave radar seekers is also a possibility, which would be a major boon for all-weather operations, but optics is really a more attainable focus here.

While this is a very simplified summary to paint a picture of the issue, this is where the future of lower-end autonomous aerial warfare is headed.

What you end up with via the injection of machine learning/AI into lower-end weaponized drones are far smarter, more dynamic, and far less predictable weapons that are harder to defend against. With the current ‘man-in-the-loop’ shackles cast off, these weapons will take on a much more impactful role on the battlefield of tomorrow than what they have already achieved.

The fact that this type of capability is already gaining emphasis on the battlefield shows how fast things are moving now, but in some cases, a form of automated targeting using electro-optical sensors has been in use for years on small kamikaze drones.

The Switchblade series, for instance, can fly itself to its target once it is designated by a human. This has many advantages, including overcoming the loss of line-of-sight connectivity very close to the ground during the weapon’s terminal attack. It is especially helpful for moving targets. As such, it is more of an assisting feature that improves probability of kill than anything else. The war in Ukraine has seen both sides working to operationalize drones with much more autonomy, potentially with no requirement for a human to designate the target prior to the drone making its terminal attack run.

A Harder Target

Defending against lower-end autonomous drones is a major problem for many reasons, and not just on active battlefields. Without a man-in-the-loop and a two-way active datalink, detection and defense, whether via soft-kill (like electronic warfare) or hard-kill (kinetic, like missile and gun systems) methods, become more problematic. The aforementioned fact that autonomous drones with longer-range capabilities can fly so much farther to specific areas to hit targets of opportunity means that they can pop up in many more locations, which greatly complicates the allocation of precious defense assets. You simply cannot defend everywhere at once, and for the longer-range variety of these systems, beyond a high concentration of air defenses, frontlines would have no meaning.

Highly localized command-link jamming becomes ineffective if the drone is operating autonomously. Even just switching over to autonomous control during a drone’s terminal attack run, as Switchblade can, makes highly localized jammers, like those now popping up on vehicles in Ukraine, relatively useless while also adding the benefit of more accurate strikes on moving targets. Fully autonomous drones would be even less challenged by localized command-link jamming because they have no command link at all.

While disrupting GPS over a wide area can also have an impact on some autonomous drone systems, those issues can also be overcome in many instances, including using simple established means for maintaining basic flight during such occurrences. Off-the-shelf optical sensors and onboard instrumentation can help even off-the-shelf drones stay aloft and on mission autonomously while GPS is disrupted. When assisted by AI, these capabilities can be made even more robust. Full-on inertial navigation systems have also become incredibly small and light, giving militarized drones even greater potential for GPS independence.

As we noted earlier, parameters can be set prior to a mission as to what the drone is to do if it were to lose GPS reception. In areas where geographic sensitivities don’t exist, the drone could go about its mission with degraded positioning. In more sensitive operating areas, it could be programmed to loiter or fly in a set direction in an attempt to regain a high-quality fix, attempt to return home, or self-destruct.

AI can also help with overcoming mission disruption via GPS spoofing by using superior logic to better recognize when spoofing is occurring and subsequently throwing out the erroneous input as it deviates from other navigational data sources.

Still, jamming or manipulating GPS over a wide area could become an even bigger imperative once self-targeting autonomous kamikaze drones become more widespread, even if it will only work to degrade some types’ capabilities under certain circumstances. But it’s worth remembering that doing so can also drastically impact a military’s own combat and logistical capability, so it can be truly a double-edged sword. Regardless, GPS spoofing is set to see much wider use in the war in Ukraine, especially due to Russia’s continued use of long-range kamikaze drones that leverage relatively low-end components that could potentially be thrown far off course via the tactic.

There is also the fact that drones with autonomous targeting capabilities can be used by non-state actors and even criminal organizations, the latter of which are already using the same drone capabilities seen on modern battlefields in terrible ways.

Providing lower-end drones with dynamic targeting over long distances without the limitations of line-of-sight connectivity opens up a vast array of domestic threat scenarios that will be very hard to deal with, especially considering these drones will be able to pick out very specific moving targets in defined areas long after the launching party has left a far-off launch site. The attacking autonomous drone would also give off no emissions that could help alert even protected high-risk persons and assets to its presence. Once again, the threat aperture just opens up in nearly every way, especially geographically.

The Great Morality Debate

The moral implications of these developments are disturbing. While the U.S. military has maintained that it intends to always keep a human-in-the-loop for life-and-death decisions, this is a luxury many other actors will have no problem with jettisoning — by choice and/or tactical necessity. Once again, we are already beginning to see this happen in Ukraine. In doing so, an adversary could gain advantages on many levels.

While talk of potential arms limitation treaties and regulations pertaining to artificial intelligence-infused weaponry gets a lot of headlines, they are often framed in a very high-end technological sense. The idea that small or relatively cheap drones that cost a fraction of a guided missile can make widespread and very deadly use of relatively basic AI capabilities makes the argument for such treaties far more complex, especially in terms of enforcement and containing proliferation.

Certainly, while some types of technologies and components could be more heavily restricted in terms of export controls and trade regulation to help in this regard, these capabilities are going to be impossible to restrain on a grand level. Most if not all are dual/multi-use. Then there is the real concern that even among major powers that could enter into an autonomous weapons ban agreement, the apple might be just too sweet not to take a bite.

If the success of major military operations in the future is significantly defined by the use of autonomous weapons (Vladimir Putin clearly thinks it will be, and China may be outpacing the U.S. in this regard, too), then giving the adversary a possible major advantage will likely be untenable. Building uncrewed systems that feature man-in-the-loop control and are capable of striking targets over considerable distances would, in most circumstances, be far more costly than autonomous ones. As such, an enemy could have an absolutely massive quantitative advantage by going after the cheaper autonomous solution, even if it is less tactically efficient. As we have talked so much about over the years and as we see demonstrated starkly in Ukraine, quantity has a quality all of its own. This is especially true as defending against low-end drones is highly problematic and costly to begin with.

The great drone production race that is occurring between Russia and Ukraine is a glaring example of this. It is very much a numbers game, not one of overwhelming quality. In fact, FPV drones are now talked about as being on par with artillery in terms of how critical they are to the fight on the front lines. This alone is an incredible and rapid development.

So, if one party can strike moving targets autonomously, even hundreds of miles away, without all the complexities of keeping a man-in-the-loop and can build many more drones than the other party that insists on avoiding deadly autonomous engagements, the advantage becomes clear.

The U.S. may be something of an exception here because it can afford the luxury of procuring more advanced systems at scale, and it will have the advanced networking architecture to support them, but there are limits to how far that would offset an enemy’s mass production of simpler autonomous drones. And the inherent vulnerabilities to the MITL control concept are a whole other issue to contend with.

Then there is the question of how any sort of regulation or treaty to curtail the proliferation of lower-end autonomous uncrewed systems with deadly capabilities would be enforced. With AI’s many other uses set to explode in the coming years, along with the hardware and software needed to support those applications, and with the basic parts for lower-end drones being so accessible or easy to produce, what would an enforcement regime even look like?

If the use of low-end autonomous drones with deadly capabilities suddenly explodes on the battlefield and quickly becomes a staple capability like weaponized FPV drones have, it will be tougher to regulate even higher-end autonomous weapons in the future. If the metaphorical ‘genie is already out of the bottle’ for throngs of low-end drones with relatively basic capabilities that are picking off their own targets, the argument for stopping far more advanced and discriminating systems from doing the same may become even more tenuous.

Yes, this is all quite disturbing. Making things worse, I have seen this emerging reality defined poorly in the press repeatedly, which makes it harder to convey its actual implications. It seems that stories either make constant references to “Skynet” and AI taking over the world, or they offer very limited looks into specific high-end applications of AI’s potential weaponization that miss the simpler and arguably more pressing threats. In some ways, it is mirroring how the danger posed by low-end drones was largely misunderstood until it materialized in full force.

There are caveats to some of the general points I have laid out in this piece as this is a very broad, conceptual overview of the topic. None of them changes the fact that all indications point to this being the natural evolution of multiple tiers of lower-end weaponized drones.

So, while the coming swarm of advanced, interconnected, cooperative, autonomous drones may be of great fascination, and rightfully so, a much less ambitious technological leap in unmanned aerial warfare is arguably just as big a threat, if not a bigger one, and it is already buzzing its way over the horizon.

Contact the author: tyler@thewarzone.com